I have been thinking about how to do this, and the only way I can come up with is to post each frame here sequentially.

I have spent over two days analyzing this movie. Have a look at the screenshot below:

So Semper Fi is standing on the left side of Ross in this view. The lady with the blue shorts bumps into Semper Fi, and he gets into Ross’s camera frame at the moment of the first shot. He moves to the right side of Ross… This all adds up, unless redcap is making the movie…

Here is the interview:

Ross claims in this video that “one officer did try to climb up the building…” Now that the body cam footage has been released, it is impossible for Ross to have seen this officer. His story does not add up…

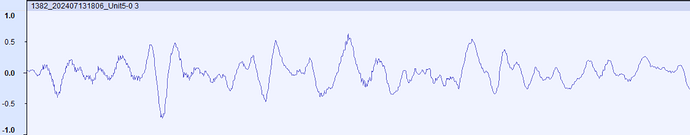

The Police Cruiser audio data has Shots 1-8 looking suspiciously similar when viewed in Audacity. Was this a cut-and-paste job? I decided to take a close look at the data in Matlab.

ffmpeg says that the audio channels in the Cruiser MP4 file were all AAC format, so I extracted the first 7 minutes of the Channel 3 audio file like this:

ffmpeg -i "Police Cruiser 1382_202407131806_Unit5-0.mp4" -t 7:00 -map 0:3 -vn -acodec copy cruiser.aac

Then I pulled the data into Matlab:

[a,Fs] = audioread('…\Peak Prosperity\cruiser.aac');

The first 8 shots are plotted here:

Cruiser CH3 Shots 1-8.pdf (3.4 MB)

Flip through them and see what you all think.

- Fake

- Real

- Unsure

Thank you @kwaka. I downloaded 10 frames. Not sure about the naming convention, so I just named them 1-10.

If anyone sets up a poll with a time limit, be aware that the date/time are GMT, not your local time. I had planned to keep that poll open for 4 hours to give time for more people to see it. Sorry.

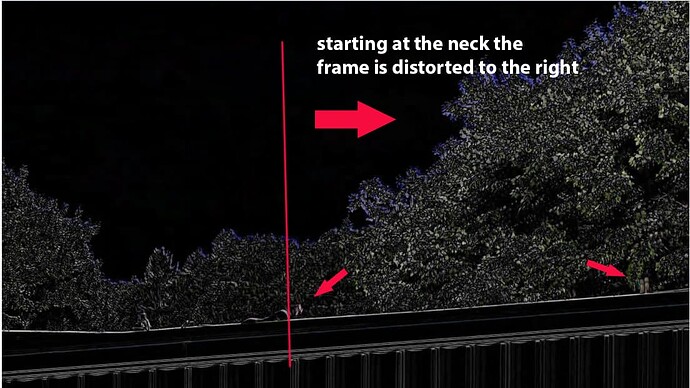

Sorry, I don’t agree. We are talking about distortion exactly at the position of Crooks’ neck, after four weeks of people complaining that there was no recoil of the rifle. If you convert the video back to an animated GIF and set the head as the fixed point, the body moves backwards, simulating the recoil. This seems to be precise human intervention trying to add the recoil of the rifle in this new version from last Sunday.

Geee. We learnt to use autocorrelation, theoretically. But this is a digitized sample sequence, and with natural sound the phases do not necessarily match.

Thank you, I hope I can find time tonight or tomorrow to go through these in detail. Here are my initial thoughts.

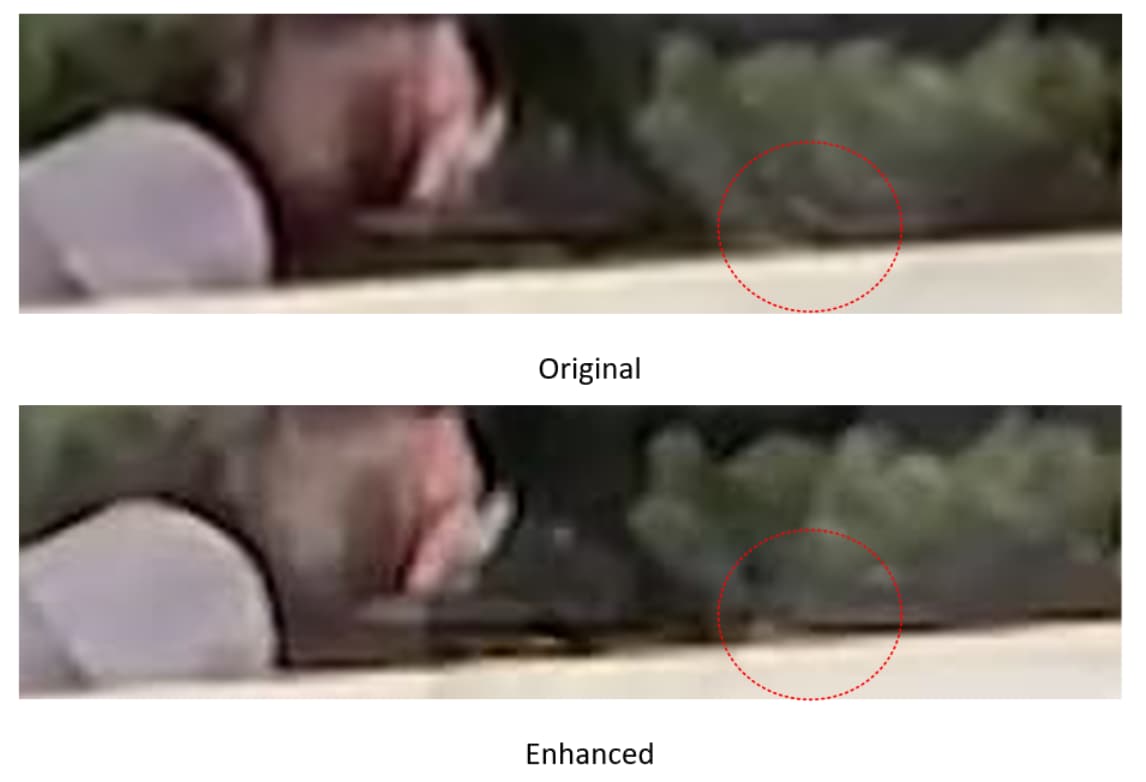

a) It doesn’t look like TMZ “found” more data in the file, it looks like they applied an unsharp mask or some similarly primitive sharpening filter to the original. Filters can’t create data. In the case of sharpening filters, they typically work by creating overshoot in the response at edges, which tricks our eyes into thinking something is sharper. Where there is inherent blockiness, this creates artifacts, like all the little rough texture in the grey shirt at its top/front edge.

b) a sharpening filter will never remove a real feature, it will just make it look “sharper.” In the crops below, look at the spot a few inches in front of Crooks’ glasses. It is there in the original, it is just blockier in the enhanced version.

c) now look at the diagonal feature I have put a red circle around in the original. It is more prominent than that little speck in front of Crooks’ eye. I think it is either part of the barrel or maybe Crooks’ thumb. Who knows, doesn’t matter. But when you look at the “enhanced” version it is gone, obliterated, see ya. Replaced with grey mush. A “sharpened” or “enhanced” filter response wouldn’t do this, it would make it appear more prominent. This has all the fingerprints of a manipulation.

d) which brings me to my last point. That SAME diagonal feature is prominently highlighted in the next frame, and looks like the last frame of a tumbling casing to our easy-to-fool eyes (it was “missing” from the prior frame). But if it was there in the frame I had highlighted, the “casing” would seem to have jerked downwards suddenly and unnaturally, and then hovered, which isn’t anything like a falling casing. So they took the feature which clearly isn’t a falling casing out of the first of the two frames and left it in the second.

Video coders are strange beasts and no one can ever know for sure after the fact what the original data was. But the rest of the local area of this frame looks like a sharpened version of the original, so we have to ask why did the higher-res version drop this oh-so-crucial feature?
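The overshoot behaviour described in point a) can be shown on a toy example. This is only a sketch in Python: a 1-D step edge, a box blur instead of whatever kernel was really used, and made-up `radius` and `amount` values. It says nothing about how TMZ actually processed the file.

```python
import numpy as np

def unsharp_1d(signal, radius=2, amount=1.0):
    """Classic unsharp mask: add back the difference from a box-blurred copy.

    radius and amount are illustrative values, not taken from any real tool.
    """
    kernel = np.ones(2 * radius + 1) / (2 * radius + 1)
    padded = np.pad(signal, radius, mode="edge")  # keep the array ends flat
    blurred = np.convolve(padded, kernel, mode="valid")
    return signal + amount * (signal - blurred)

# A hard edge between a dark region (50) and a bright region (200).
edge = np.concatenate([np.full(10, 50.0), np.full(10, 200.0)])
sharp = unsharp_1d(edge)

# The sharpened edge undershoots below 50 and overshoots above 200,
# while the flat regions away from the edge are left unchanged.
print(np.round(sharp, 1))
```

The point is that the filter exaggerates an existing edge; it has no mechanism for flattening one into grey mush.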

What is the wavelength of a 1 kHz sound? Is it about a foot?
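For reference, wavelength is just speed divided by frequency. A quick check in Python, assuming a speed of sound of about 343 m/s (dry air near 20 °C, which is my assumption and not a measured value for that day):

```python
def wavelength_m(freq_hz: float, speed_m_s: float = 343.0) -> float:
    """Wavelength in metres; the 343 m/s default assumes dry air at about 20 °C."""
    return speed_m_s / freq_hz

lam = wavelength_m(1000.0)
print(f"{lam:.3f} m = {lam / 0.3048:.2f} ft")  # prints "0.343 m = 1.13 ft"
```

So "about a foot" is a fair estimate, and warmer air makes it slightly longer.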

Sound propagation around a barricade is a wave-mechanics problem.

Is the Huygens-Fresnel principle applicable to sound?

Could you make a version of this with just the first four waveforms? After that, the energy of successive shots (whether real or pasted) starts to overlap…

I just exported the PDF pages and put them together into an animated GIF.

Yeah, that is along the lines of what I’ve just written, except for the scaling, because R has a built-in correlation function. But I still thank you for the algorithm, also because I just learned how a correlation function works at its heart. Testing the script is rather slow, though, even though I used only the 10 interesting seconds (at a sample rate of 48 kHz) and a 0.2 s sample taken from the first shot.
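On the speed problem: sliding a 0.2 s template over 10 s of 48 kHz audio sample by sample is slow, but the same normalized correlation can be computed with an FFT. Here is a minimal Python sketch (not the R script discussed here). `sliding_ncc` is a name I made up, and the demo signal is synthetic noise with the same burst pasted in twice, not the cruiser audio.

```python
import numpy as np

def sliding_ncc(x, template):
    """Normalized cross-correlation (Pearson r) of template against every
    window of x, computed with an FFT instead of a sample-by-sample loop."""
    x = np.asarray(x, dtype=float)
    t0 = np.asarray(template, dtype=float)
    t0 = t0 - t0.mean()                      # zero-mean template
    m = len(t0)
    nfft = 1 << (len(x) + m - 1).bit_length()
    # Correlation = convolution with the time-reversed template.
    full = np.fft.irfft(np.fft.rfft(x, nfft) * np.fft.rfft(t0[::-1], nfft), nfft)
    num = full[m - 1:len(x)]                 # lags where the template fits fully
    # Per-window sum and sum of squares via cumulative sums.
    c1 = np.concatenate(([0.0], np.cumsum(x)))
    c2 = np.concatenate(([0.0], np.cumsum(x * x)))
    s1 = c1[m:] - c1[:-m]
    s2 = c2[m:] - c2[:-m]
    var = np.maximum(s2 - s1 * s1 / m, 0.0)  # window energy about its mean
    denom = np.sqrt(var * np.dot(t0, t0))
    out = np.zeros_like(num)
    np.divide(num, denom, out=out, where=denom > 1e-12)
    return out

# Synthetic demo: 10 s of low-level noise with one decaying burst pasted at 1 s and 4 s.
fs = 48_000
rng = np.random.default_rng(0)
audio = 0.01 * rng.standard_normal(10 * fs)
n = int(0.2 * fs)
burst = rng.standard_normal(n) * np.exp(-np.linspace(0, 8, n))
audio[1 * fs:1 * fs + n] += burst
audio[4 * fs:4 * fs + n] += burst

r = sliding_ncc(audio, audio[1 * fs:1 * fs + n])
print(np.flatnonzero(r > 0.9) / fs)          # lags (in seconds) that match the template
```

A sample-exact paste would give a correlation very close to 1 at the pasted position. Lossy coding such as AAC would be expected to pull that value down somewhat, so the threshold is a judgment call.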

This is truly weird. Perfectly sinusoidal noiseless waveforms with the same peaks and zero crossings. It’s like someone generated these synthetically by “randomly” slightly tweaking the amplitude of one or more frequency coefficients.

I have a lot of questions in my head. How to explain briefly…

In some other fields it is possible to study the correlation between regions of the same wavelength: the so-called region size of compatible wavelength.

What does it mean? At the given frequency, one period of a sine should be taken and, let’s say, the sample projected onto that basis (in Hilbert space). Then we might correlate those regions. Now it is a function of time delay and wavelength. (Actually, it is a possible/promising theory of quantum gravity. (Hooks of space.)) Haven’t I missed the phase? Then both sine and cosine are needed.
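The sine-plus-cosine idea can be sketched like this in Python. Projecting a segment onto both a cosine and a sine at the chosen frequency recovers amplitude and phase together. `quadrature_projection` is a hypothetical helper name, and the result is only exact when the segment spans a whole number of periods.

```python
import numpy as np

def quadrature_projection(x, freq_hz, fs_hz):
    """Project x onto cos and sin at freq_hz; return (amplitude, phase).

    For x = A*cos(2*pi*f*t + phi) over a whole number of periods this
    returns (A, phi). Other segment lengths give only an approximation.
    """
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x)) / fs_hz
    a = 2.0 / len(x) * np.dot(x, np.cos(2 * np.pi * freq_hz * t))  # cosine part
    b = 2.0 / len(x) * np.dot(x, np.sin(2 * np.pi * freq_hz * t))  # sine part
    return np.hypot(a, b), np.arctan2(-b, a)

# Ten full periods of a 1 kHz tone sampled at 48 kHz, with a known phase offset.
fs, f = 48_000.0, 1000.0
t = np.arange(480) / fs
amp, phase = quadrature_projection(0.7 * np.cos(2 * np.pi * f * t + 0.5), f, fs)
print(amp, phase)  # close to 0.7 and 0.5
```

With the sine alone the phase information is lost, which is why both components are needed.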

VLC player was unable to export raw audio. It is not pure PCM (1) but some compressed format (80). I should install ffmpeg.

1-2-3

4-5-6-7-8

Meanwhile I am trying to understand how the window glass attenuates the sound. Unfortunately there is no Lagrangian function for dry Coulomb friction, so it is awkward to describe it with a row of atomic-level dissipative harmonic oscillators.

When you look at how the cruiser recorded shots 9 and 10, it didn’t come close to killing high frequencies. Here is shot 10, same channel Greg just captured:

Is this the same as the “later/edited” file?

Hey guys.

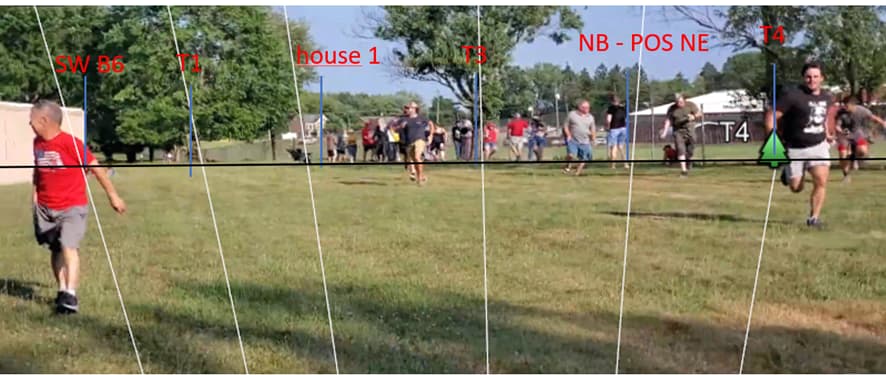

I have been trying to improve my method for geolocating the footage.

My original method consisted of defining reference objects in Google Earth, defining a possible location for the footage, and inserting the image on the map so that it fits all the points. Then I try to adjust the footage location to better fit the image.

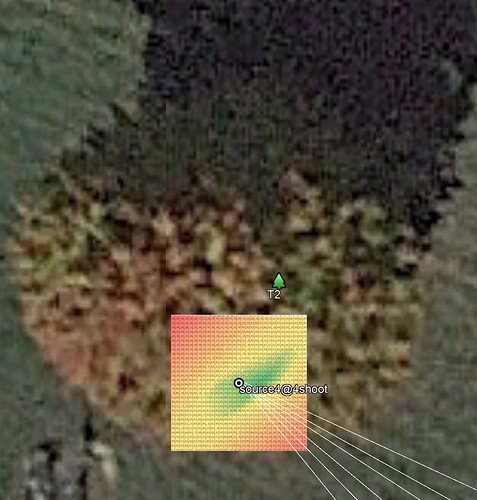

Here is the footage from source 4 at shot 4, with the lines of sight (in white) of each reference point, the reference positions (in blue) and the names (in red):

The match is not perfect, so I’m trying to use math to reduce the error between the positions predicted from the geometry and those measured in the footage, in pixels.

The angles from the footage geolocation to the reference geolocations are measured and converted to pixels, and then the error against the actual footage is measured. At the original location I got an average error of 33 pixels, for a total footage width of 3264 pixels. Then I ran a test for various locations near my first guess. The result is a map of the error in pixels; the green region is the location with minimal error.

The best location is 0.67 m to the east of my original location, reducing the average error from 32 pixels to 27 pixels. That is pretty good, but I’m still trying to improve my method. If anyone wants to give me some insight, thanks in advance.

Here are the locations used, and the pixels measured in the footage from source 4 at 21.556 s:

| REF | E (m) | N (m) | pixel position |
|---|---|---|---|
| S4 AT 4S | 586683.23 | 4523537.98 | n/a |
| SW B6 | 586735.97 | 4523514.77 | 362 |
| T1 | 586774.67 | 4523488.78 | 747 |
| house 1 | 587071.96 | 4523293.80 | 1216 |
| T3 | 586728.02 | 4523501.77 | 1774 |
| NB - POS NE | 586815.38 | 4523406.93 | 2306 |
| T4 | 586706.91 | 4523509.66 | 2871 |
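One possible way to automate the search around the first guess, as a rough Python sketch using the numbers from the table. Several things here are my assumptions and not the method described in this post: E and N are treated as metres, the pixel column as horizontal position, and pixel position is modelled as a straight-line function of bearing that is refit at every candidate location. Because of that refit, the errors it prints will not match the 33/27 pixel figures quoted. All function names are made up.

```python
import numpy as np

# Reference points (E, N) and measured pixel positions, copied from the table.
REFS = np.array([
    [586735.97, 4523514.77],   # SW B6
    [586774.67, 4523488.78],   # T1
    [587071.96, 4523293.80],   # house 1
    [586728.02, 4523501.77],   # T3
    [586815.38, 4523406.93],   # NB - POS NE
    [586706.91, 4523509.66],   # T4
])
PIXELS = np.array([362, 747, 1216, 1774, 2306, 2871], dtype=float)
FIRST_GUESS = np.array([586683.23, 4523537.98])  # row "S4 AT 4S"

def relative_bearings(cam, refs):
    """Bearing of each reference seen from cam, relative to the first one (radians)."""
    d = refs - cam
    bearing = np.arctan2(d[:, 0], d[:, 1])       # clockwise from north
    return (bearing - bearing[0] + np.pi) % (2 * np.pi) - np.pi

def mean_pixel_error(cam, refs, pixels):
    """Mean absolute residual after fitting pixel = slope * bearing + intercept.

    The linear pixel model is an assumption; a real lens follows a tangent law.
    """
    ang = relative_bearings(cam, refs)
    slope, intercept = np.polyfit(ang, pixels, 1)
    return float(np.mean(np.abs(slope * ang + intercept - pixels)))

def grid_search(first_guess, refs, pixels, half_width=3.0, step=0.25):
    """Try every camera position on a square grid centred on first_guess."""
    offsets = np.arange(-half_width, half_width + step / 2, step)
    best_err, best_cam = np.inf, None
    for de in offsets:
        for dn in offsets:
            cam = first_guess + np.array([de, dn])
            err = mean_pixel_error(cam, refs, pixels)
            if err < best_err:
                best_err, best_cam = err, cam
    return best_err, best_cam

err0 = mean_pixel_error(FIRST_GUESS, REFS, PIXELS)
err, cam = grid_search(FIRST_GUESS, REFS, PIXELS)
print(f"first guess: {err0:.1f} px, best on grid: {err:.1f} px, offset (E, N): {cam - FIRST_GUESS}")
```

With only six reference points, a least-squares fit over position, heading and focal length together might be more robust than a grid, but that would need a proper camera model.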